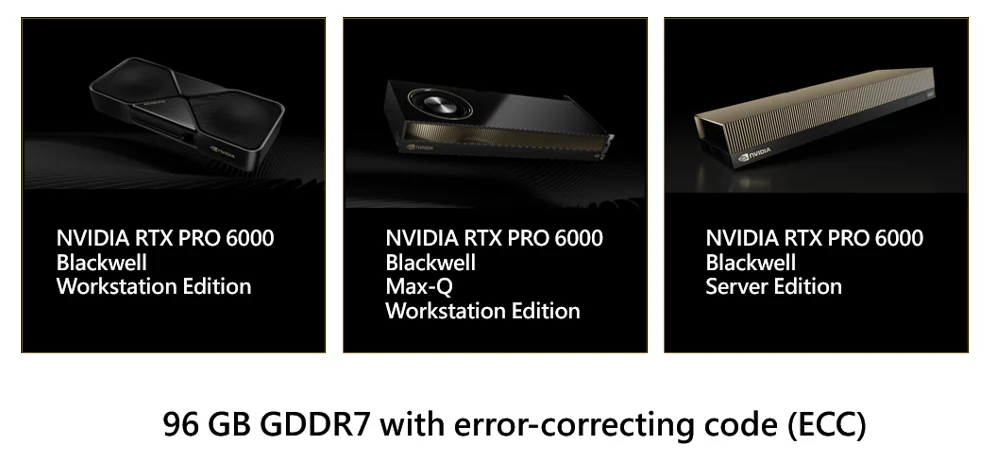

NVIDIA has introduced the RTX PRO™ 6000 Blackwell series GPUs for workstations and data centers: the Workstation Edition, Max-Q Workstation Edition, and Server Edition. All three models feature a massive 96GB GDDR7 memory upgrade, significantly accelerating computing, AI training / fine-tuning / inference, ray tracing, and neural rendering technologies. They deliver powerful next-generation computing performance and multi-GPU scalability, redefining workflows for AI, technical, creative, engineering, and design professionals.

The next-generation NVIDIA RTX PRO 6000 Blackwell adopts GDDR7 memory with error correction code (ECC) across the lineup, doubling the capacity of the previous Ada generation to 96GB per card, which suits large-scale 3D models and AI projects. It connects over PCIe 5.0 x16 for up to 64GB/s of bandwidth, significantly improving data transfer efficiency between CPU and GPU while offering excellent expansion flexibility.
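As a rough check on the 64GB/s figure, the sketch below derives PCIe link bandwidth from the per-lane transfer rate. It assumes the PCIe 5.0 values of 32 GT/s per lane and 128b/130b line encoding, counts one direction only, and ignores packet and protocol overhead, so real-world throughput will be lower. The function names are illustrative and do not come from any NVIDIA tool.

```python
def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int,
                        encoding_efficiency: float = 128 / 130) -> float:
    """Approximate one-direction PCIe bandwidth in decimal GB/s.

    gt_per_s is the per-lane transfer rate (32 for PCIe 5.0, assumed here).
    encoding_efficiency accounts for 128b/130b line encoding.
    """
    bits_per_second = gt_per_s * 1e9 * lanes * encoding_efficiency
    return bits_per_second / 8 / 1e9


def transfer_seconds(size_gb: float, bandwidth_gb_s: float) -> float:
    """Best-case time to move size_gb across a link of the given bandwidth."""
    return size_gb / bandwidth_gb_s


if __name__ == "__main__":
    raw = pcie_bandwidth_gb_s(32, 16, encoding_efficiency=1.0)
    effective = pcie_bandwidth_gb_s(32, 16)
    print(f"raw: {raw:.1f} GB/s, after encoding: {effective:.1f} GB/s")
    print(f"filling 96 GB takes at least {transfer_seconds(96, effective):.2f} s")
```

Under these assumptions the x16 link carries 64 GB/s raw and roughly 63 GB/s after encoding, so filling the full 96GB of memory from the host takes on the order of 1.5 seconds at best.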

Looking at today's most talked-about AI applications, large language models (LLMs) have become the technological core, widely used in scenarios such as Q&A systems, chatbots, data summarization, and programming assistance. These models rely on massive computing power and memory resources during inference and reasoning, requiring multi-step processing to produce accurate results. When extended into Physical AI applications, simulation, testing, training, and inference must first be conducted in virtual environments before they can be deployed in the real world. These processes are highly complex and involve the integration and collaboration of multiple AI technologies, further highlighting the critical role of high-performance GPUs and large memory in modern AI development.

In addition, the fifth-generation Tensor Cores on the NVIDIA RTX PRO 6000 Blackwell accelerate the matrix computations that neural network training and inference depend on, boosting computational throughput by up to 3x. They add FP4 precision alongside support for the TF32, BF16, FP16, FP8, and FP6 data types. In a test with the same Flux.dev model, generating an image at FP4 precision required only 10GB of VRAM and 3 seconds, whereas the previous generation at FP16 precision required 23GB of VRAM and 20 seconds. The generated results are similar, but generation time and memory requirements drop sharply, allowing professionals to run more applications simultaneously.
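The memory savings follow largely from the number of bits stored per model weight. The sketch below estimates weight storage at different precisions. It is a weights-only approximation: the 12-billion parameter count is an assumption chosen for illustration, and activations, text encoders, and other runtime buffers are not modeled, so it will not reproduce the measured 23GB and 10GB figures exactly.

```python
# Storage width of each data type, in bits per parameter.
BITS_PER_PARAM = {"FP16": 16, "BF16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Estimate memory for model weights alone, in decimal GB."""
    return num_params * BITS_PER_PARAM[precision] / 8 / 1e9


def ratio(before: float, after: float) -> float:
    """How many times smaller (or faster) 'after' is than 'before'."""
    return before / after


if __name__ == "__main__":
    assumed_params = 12e9  # hypothetical model size, for illustration only
    for precision in ("FP16", "FP8", "FP4"):
        gb = weight_memory_gb(assumed_params, precision)
        print(f"{precision}: {gb:.1f} GB of weights")

    # Figures reported in the test above: 23 GB / 20 s (FP16) vs 10 GB / 3 s (FP4).
    print(f"VRAM reduction: {ratio(23, 10):.1f}x")
    print(f"Time reduction: {ratio(20, 3):.1f}x")
```

Moving from FP16 to FP4 cuts weight storage to a quarter. The reported VRAM reduction is smaller (2.3x) because not everything in memory scales with weight precision.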

The power of NVIDIA RTX PRO Blackwell GPUs extends beyond hardware. It also provides a complete AI development environment based on CUDA-X microservices. The CUDA-X libraries, built on CUDA, simplify the process of using NVIDIA's accelerated platform for data processing, AI, and high-performance computing. Engineers can easily build, optimize, deploy, and scale applications using the CUDA platform, whether on PC, workstation, cloud, or supercomputer.

Compared to the previous-generation RTX 6000 Ada GPU, the NVIDIA RTX PRO 6000 Blackwell Workstation Edition GPU offers a 3.8x improvement in LLM inference performance, 2.5x in text-to-image generation, 7.1x in genomics, 15.2x in video editing, 2.5x in Omniverse 3D simulation and collaboration platform, and 2.4x performance upgrade in rendering and graphics.

Further testing shows that the new generation NVIDIA RTX PRO 6000 Blackwell Workstation Edition achieves an astonishing 5.7x AI performance increase in the LLM Mistral test. In the AI inference Geekbench AI test, it shows a 1.9x improvement. For AI inference DeepSeek R1 and AI training GraphSAGE, both demonstrate a 2.1x lead. In data science RAPIDS cuDF, there's a 1.8x performance boost.

Unlike NVIDIA HGX rack-mounted server platforms, the NVIDIA RTX PRO 6000 Blackwell series is designed primarily for desktop PCs, workstations, and multi-GPU servers, allowing professional users to configure equipment flexibly based on their needs. It is available in Workstation Edition, Max-Q Workstation Edition, and Server Edition.

NVIDIA RTX PRO™ 6000 Blackwell Series (Image from Leadtek)

The Workstation Edition of the NVIDIA RTX PRO 6000 Blackwell GPU features the same Founders Edition cooling design as the desktop RTX 5090, but in an all-black finish. It uses a dual-slot design, 5.4 inches high and 12 inches long. As a professional workstation card, it is a versatile GPU that can accelerate AI development, AI inference, data science, HPC, AI rendering and graphics, video content and streaming, as well as game development and other professional tasks.

The NVIDIA RTX PRO 6000 Blackwell Workstation Edition GPU boasts 4000 TOPS AI performance, 380 TFLOPS RT core performance, and 125 TFLOPS single-precision performance. It features 24,064 CUDA cores and 96GB of GDDR7 ECC memory. It has a maximum total board power of 600W and uses a PCIe Gen5 16-pin power connector. It also supports Multi-Instance GPU (MIG), capable of splitting into up to four independent 24GB GPUs.

For display output, there are four DisplayPort 2.1 ports, supporting up to four 4K@165Hz or two 8K@100Hz displays. It also supports RTX PRO Sync, Mosaic, and RTX desktop management software.

The NVIDIA RTX PRO 6000 Blackwell Workstation Edition delivers the most powerful single-card AI performance and a massive 96GB memory capacity, enabling a desktop PC to meet the performance requirements of today's AI development.

The NVIDIA RTX PRO™ 6000 Blackwell Max-Q Workstation Edition features a 300W total board power limit and a dual-slot design. Measuring 4.4 inches in height and 10.5 inches in length, it utilizes active blower-style cooling. It is designed to enable lower-power workstations to scale to multi-GPU configurations with the RTX PRO 6000 Blackwell Max-Q, thereby achieving greater total memory capacity.

At 300W per card, four GPUs provide a total memory capacity of 384GB in a single workstation. This requires only a 1500W-2000W power supply, enabling desktop workstations to handle complex AI training and fine-tuning tasks. In terms of performance, a single card provides 3511 TOPS AI performance, 333 TFLOPS RT core performance, and 110 TFLOPS single-precision performance.
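The multi-GPU arithmetic can be sketched as follows. The helper names are hypothetical, and the calculation covers GPU board power only: the CPU, storage, fans, and power-supply efficiency margin all have to fit in whatever headroom remains.

```python
def total_memory_gb(num_gpus: int, memory_per_gpu_gb: int) -> int:
    """Combined GPU memory across all cards."""
    return num_gpus * memory_per_gpu_gb


def gpu_power_w(num_gpus: int, board_power_w: int) -> int:
    """Combined maximum board power across all cards."""
    return num_gpus * board_power_w


def psu_headroom_w(psu_w: int, num_gpus: int, board_power_w: int) -> int:
    """Power left for the rest of the system; negative means over budget."""
    return psu_w - gpu_power_w(num_gpus, board_power_w)


if __name__ == "__main__":
    # Four Max-Q cards: 96 GB and 300 W each.
    print(total_memory_gb(4, 96), "GB total GPU memory")
    print(gpu_power_w(4, 300), "W for the GPUs")
    for psu in (1500, 2000):
        print(f"{psu} W PSU leaves {psu_headroom_w(psu, 4, 300)} W for the rest")

    # The same four-card build with 600 W Workstation Edition cards.
    print(f"4 x 600 W on a 2000 W PSU: {psu_headroom_w(2000, 4, 600)} W")
```

Four 300W cards draw 1200W, which leaves 300W to 800W of headroom within the quoted power-supply range. Four 600W cards would need 2400W for the GPUs alone, which is why the Max-Q edition is the one positioned for dense workstation builds.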

The Server Edition of the NVIDIA RTX PRO 6000 Blackwell GPU launched at the same time. It has the same memory specifications, but its key difference is a board power that can be adjusted between 400W and 600W. It uses a dual-slot design, 4.4 inches high and 10.5 inches long, with a passive heatsink that relies on the rack server's airflow for heat dissipation.

In terms of performance, it delivers 117 TFLOPS single-precision performance, 3.7 PFLOPS FP4 AI performance, and 354.5 TFLOPS RT core performance. It also provides four DisplayPort 2.1 outputs. In short, the NVIDIA RTX PRO 6000 Blackwell Server Edition uses a passive heatsink and follows standard full-length card design, enabling flexible GPU expansion in rack servers.

Compared to the L40S, the NVIDIA RTX PRO 6000 Blackwell Server Edition delivers up to 5.6x higher inference throughput on Llama 3 8B / 70B and 2x faster model fine-tuning. On Mixtral 8x7B, inference throughput is 3.6x higher and model fine-tuning is 1.8x faster.

NVIDIA Omniverse is based on OpenUSD, combined with RTX real-time rendering and generative physical AI, integrated into existing software tools and simulation workflows. It provides a training platform for AI automation systems in large-scale, accurate physical simulation environments. Compared to the L40S, the NVIDIA RTX PRO 6000 Blackwell Server Edition achieves a 2.2x performance boost in Omniverse real-time rendering, and a 1.8x improvement in robot sensing simulations, helping drive forward applications in digital twins and robotics development.

The NVIDIA RTX PRO 6000 Blackwell series, including the Workstation, Server, and Max-Q Workstation Editions, allows professional users to select the appropriate GPU based on system power consumption, add-in card size, and cooling method. It leverages excellent AI processing capabilities to accelerate the latest advanced models and handle complex design, simulation, rendering, video editing effects, and other creative workflows.
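As a minimal sketch of that selection process, the snippet below filters the three editions by power budget, card length, and cooling. The figures are the ones quoted in this article. The `Edition` class and `candidates` function are hypothetical helpers, and since only a maximum board power is given for the Workstation Edition, it is treated here as a fixed 600W.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edition:
    name: str
    min_power_w: int   # lowest board power the card can be run at
    max_power_w: int
    height_in: float
    length_in: float
    cooling: str       # "active" (own fan) or "passive" (needs chassis airflow)


EDITIONS = [
    # Assumption: the Workstation Edition is modeled as a fixed 600 W card.
    Edition("Workstation Edition", 600, 600, 5.4, 12.0, "active"),
    Edition("Max-Q Workstation Edition", 300, 300, 4.4, 10.5, "active"),
    Edition("Server Edition", 400, 600, 4.4, 10.5, "passive"),
]


def candidates(power_budget_w: int, max_length_in: float,
               chassis_provides_airflow: bool) -> list[str]:
    """Return the editions that fit the given per-card constraints."""
    result = []
    for edition in EDITIONS:
        if edition.min_power_w > power_budget_w:
            continue  # cannot run within the per-card power budget
        if edition.length_in > max_length_in:
            continue  # card is too long for the chassis
        if edition.cooling == "passive" and not chassis_provides_airflow:
            continue  # passive heatsink needs forced airflow from the chassis
        result.append(edition.name)
    return result


if __name__ == "__main__":
    # A compact desktop with 350 W per slot and no server-style airflow.
    print(candidates(350, 11.0, chassis_provides_airflow=False))
    # A rack server with ample power, length, and airflow.
    print(candidates(600, 12.0, chassis_provides_airflow=True))
```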

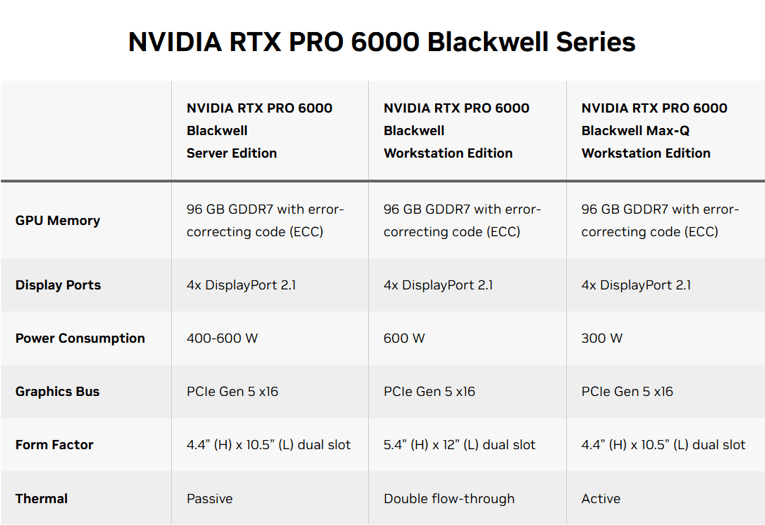

NVIDIA RTX PRO™ 6000 Blackwell Specifications. Source: NVIDIA website.

The NVIDIA RTX PRO 6000 Blackwell is distributed by NVIDIA's most representative long-term partner, Leadtek, which has long been deeply involved in the professional GPU field. Leadtek not only possesses strong technical expertise, but also offers complete technical support and localized service systems, helping enterprises quickly implement high-performance computing solutions.

This year, Leadtek has further expanded its presence by becoming the designated supplier for the NVIDIA DGX Spark desktop personal AI supercomputer, actively promoting the rapid adoption of AI across various industries.

Source: news.xfastest.com